Different approaches to Detection as Code

Introduction

While I spend most of my “SIEM time” in Microsoft Sentinel these days, I believe it’s important to understand how the product field in general approaches certain key aspects of Security Operations.

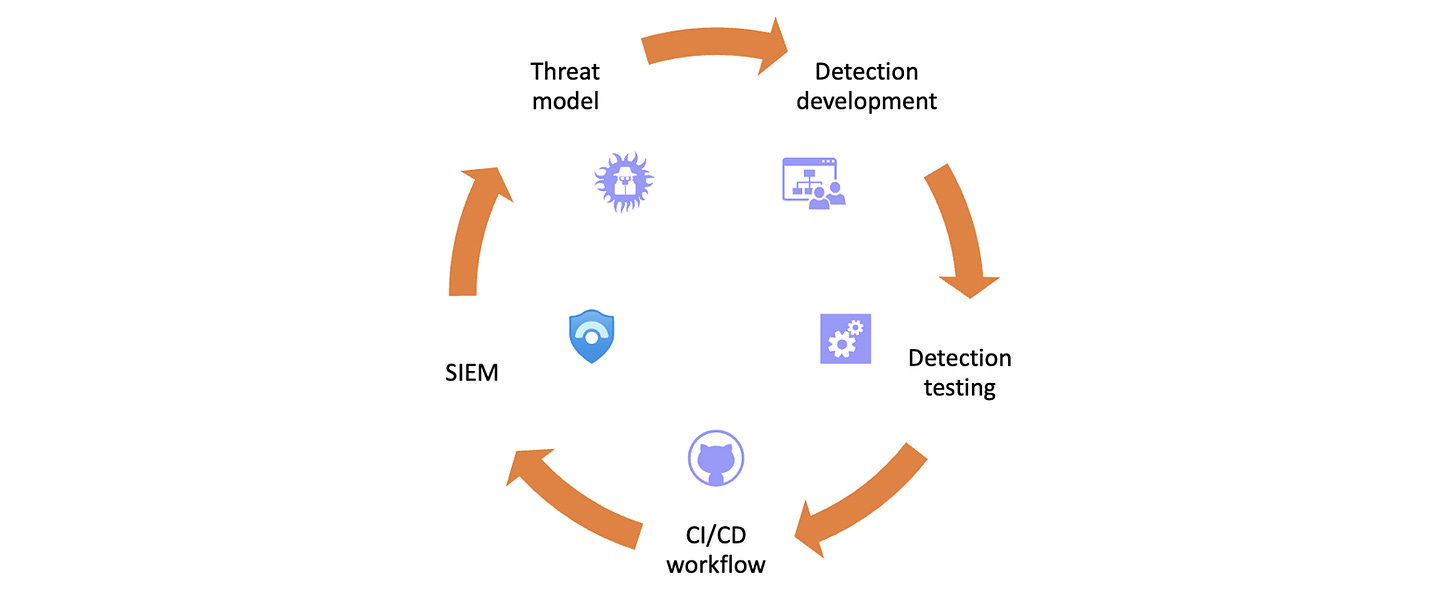

So, I decided to take another look at a few modern cloud-based SIEM products, this time focusing on how they approach Detection as Code and CI/CD workflows.

Detection as Code is a key differentiator between SecOps and SIEM practices of the past and present. It’s a modern approach to building threat detection, with a structured process that lets the whole team participate in building and improving rules for SIEM, sometimes also for XDR.

No more “we just trust whatever the vendors give us” or “everyone has their own way of writing, testing and deploying rules”. Create a repeatable workflow, where the whole team takes part in identifying and creating the detections you need.

So, how do modern SIEMs help us with this? Let’s take a quick look at three different products.

Product comparison

There are other products worth looking at as well, but for this article the following three are enough. I included Panther here as a reference, as I have lately been following it in addition to Microsoft Sentinel and Google Chronicle.

Microsoft Sentinel

Native API and built-in Repositories feature with support for deploying from Azure DevOps and GitHub.

Also deploys other configuration, including automation Playbooks.

Detection language: ARM templates and KQL.

Documentation: https://learn.microsoft.com/en-us/azure/sentinel/ci-cd?tabs=github

Google Chronicle

Native Detection Engine API.

No built-in deployment feature.

Detection language: YARA-L.

Documentation: https://cloud.google.com/chronicle/docs/reference/detection-engine-api

Panther

Native API and deployment support with CircleCI. It also has an out-of-the-box testing feature for the pipeline.

Detection language: Python.

Documentation: https://docs.panther.com/panther-developer-workflows/ci-cd-onboarding-guide
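To give a feel for what "Detection language: Python" means in practice, here is a minimal sketch of a Panther-style rule. It assumes the common convention of a `rule(event)` function that returns a boolean and an optional `title(event)` function for the alert title. The detection itself (AWS console login without MFA) and the CloudTrail field names are my own illustration, not taken from Panther's content, and plain dictionaries stand in for the event object here.

```python
# Hypothetical Panther-style detection: console login without MFA.
# The event is treated as a plain dict; field names follow AWS CloudTrail
# ConsoleLogin events and are used here only for illustration.


def rule(event):
    """Return True when the event should raise an alert."""
    if event.get("eventName") != "ConsoleLogin":
        return False
    extra = event.get("additionalEventData") or {}
    return extra.get("MFAUsed") == "No"


def title(event):
    """Build a human-readable alert title from the event."""
    user = (event.get("userIdentity") or {}).get("arn", "unknown user")
    return f"Console login without MFA by {user}"


# A sample event, similar to what a pipeline test could feed the rule.
sample_event = {
    "eventName": "ConsoleLogin",
    "additionalEventData": {"MFAUsed": "No"},
    "userIdentity": {"arn": "arn:aws:iam::123456789012:user/alice"},
}

if __name__ == "__main__":
    print(rule(sample_event), "-", title(sample_event))
```

Because the logic is plain Python, it can be exercised with sample events in a pipeline before deployment, which is the kind of testing the CI/CD guide above is about.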

Maybe some day I will do more thorough live testing of these different approaches, but for now I am just using this comparison as a quick starting point for identifying the maturity of, and possible issues with, detection engineering in Microsoft Sentinel, as that is the SIEM I spend my time in.

Sentinel perspectives

From a Microsoft Sentinel perspective, I think support for detection as code and CI/CD workflows can be viewed from two different angles.

The Repositories feature is a great way to get started and build a working detection pipeline with minimal configuration. I also appreciate that Repositories supports maintaining configuration other than just detections.

But honestly, when it comes to actually writing rules, I think the competition is ahead. ARM templates are a terrible language for writing SIEM content (there, I said it!). And when you add the dual complexity of “ARM + often very long KQL queries” to the mix, the problem just gets bigger.

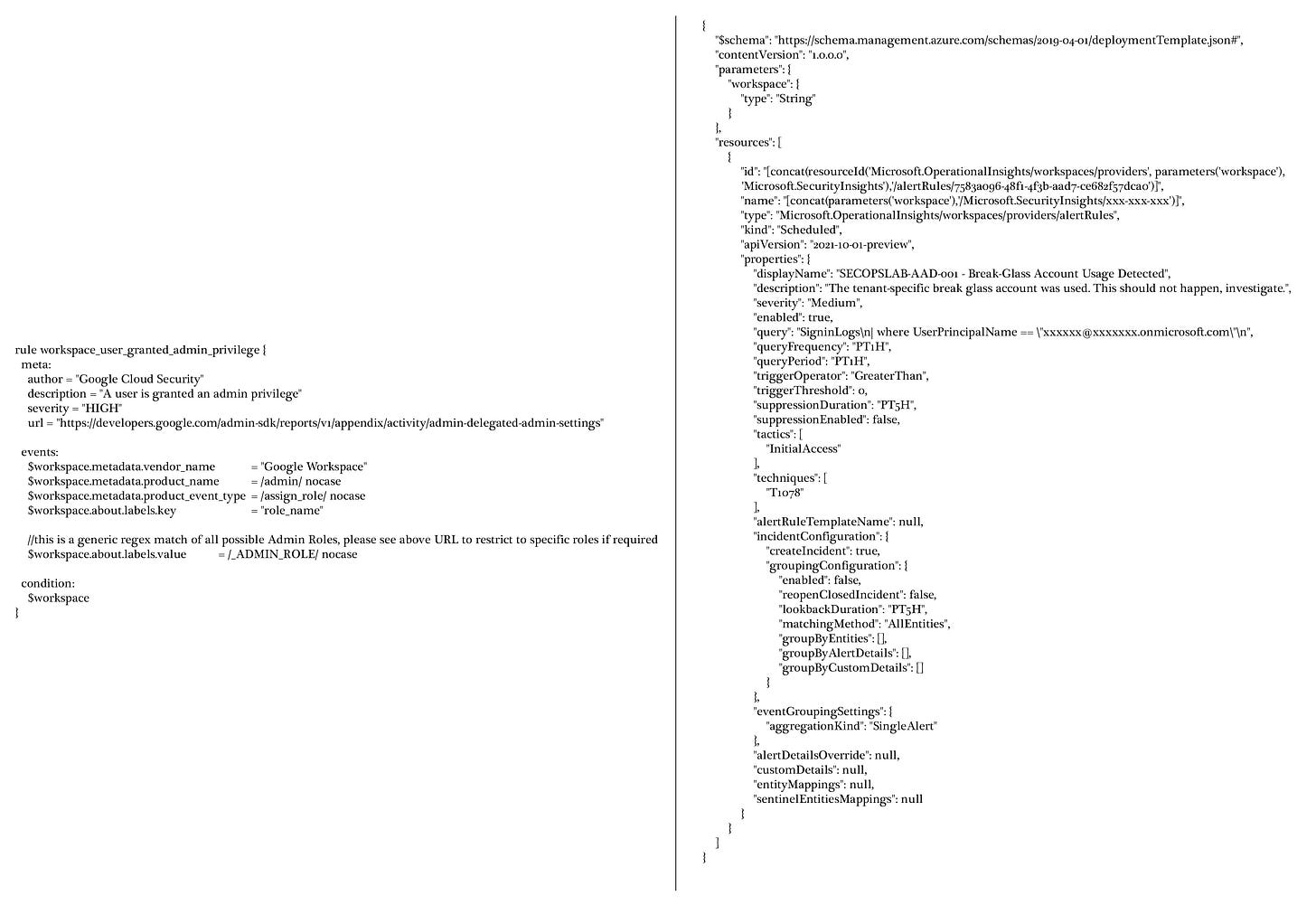

For comparison, below are rules with similar detection complexity in Google Chronicle and Microsoft Sentinel. YARA-L vs. ARM & KQL.

Which would be easier to discuss in a detection sprint meeting with the team?

Can we just stop for a moment to appreciate how elegant YARA-L is? 🤩 One language for both the query and the metadata, no extra fluff.

My dream is that one day we have something like SIGMA in Sentinel. A language that unifies all components of the rule into one format. But I feel this is unlikely to actually happen, as we are so dependent on KQL for almost everything in Sentinel.

Meanwhile, how can Sentinel users make the most of what we have at the moment?

What can we learn?

I think the lesson we can learn from other products at the moment is that writing clean and easy-to-understand detections is an important goal. And it’s worth the time to think about how your team approaches writing detection content. How can you make the rules you write as simple and easy to understand as possible?

And if we find there are certain problems with this in Sentinel, we can also find ways to make the code easier to work with. 👍

In general, this likely means that you build mockups without the official template and query language during the first stages of development. Identify what you need to search for, which keywords and lookups you need, and write down the basic idea for discussion with the team.
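One possible shape for such a mockup is a small, language-neutral record of the idea. The sketch below is only an illustration: the `DetectionIdea` class, its field names and the example values are all hypothetical, and a wiki page or a work item template would do the same job.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DetectionIdea:
    """A detection mockup with no ARM or KQL in it (hypothetical structure)."""

    name: str
    goal: str
    data_sources: list[str]
    keywords: list[str] = field(default_factory=list)
    lookups: list[str] = field(default_factory=list)
    response: str = ""

    def summary(self) -> str:
        """Render the idea as plain text for a sprint discussion."""
        lines = [
            f"Detection: {self.name}",
            f"Goal: {self.goal}",
            f"Data sources: {', '.join(self.data_sources)}",
            f"Keywords: {', '.join(self.keywords) or 'none yet'}",
            f"Lookups: {', '.join(self.lookups) or 'none yet'}",
            f"Response: {self.response or 'to be decided'}",
        ]
        return "\n".join(lines)


# Example values are invented for illustration.
idea = DetectionIdea(
    name="Sign-in from unexpected country",
    goal="Spot account use from locations the user never works from",
    data_sources=["Sign-in logs"],
    keywords=["country", "user principal name"],
    lookups=["Allowed countries per user"],
    response="Analyst confirms travel with the user",
)

if __name__ == "__main__":
    print(idea.summary())
```

The point is that everything the team needs to debate (data, keywords, lookups, response) is visible before anyone has written a line of query or template code.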

When proceeding to the actual rule building and KQL, I think the key is to cut all unnecessary clutter. Don’t make the query any more complex than you absolutely must. Use the minimum amount of data and minimum amount of outputs that automation or human analysts need for response.

As for the ARM template side… Well, I think there are two choices:

Either accept the situation as it is, which likely means you focus on just the KQL query when doing detection development and have some in-house taxonomy for writing the metadata in your work items during development sprints. Then create the actual ARM template later, when you need to test the detection.

Or you look at writing the rules in YAML and converting them during the CI/CD workflow. There is an example of this in the Sample Content Repository.
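To make the second option more concrete, here is a rough sketch of what such a conversion step could look like. This is not the converter from the Sample Content Repository. The rule field names (`id`, `name`, `queryFrequency` and so on), the example rule and the API version are assumptions of mine, and the rule is shown as an already parsed dictionary, so a real pipeline would first load it from the YAML file with a YAML parser.

```python
import json
import uuid

ARM_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"
API_VERSION = "2023-02-01"  # assumption: use whichever version your pipeline targets


def rule_to_arm(rule: dict) -> dict:
    """Wrap a simple rule definition in an ARM template for a scheduled analytics rule."""
    # Derive the GUID from the rule id so redeploying updates the same rule.
    rule_guid = str(uuid.uuid5(uuid.NAMESPACE_URL, rule["id"]))
    return {
        "$schema": ARM_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {"workspace": {"type": "String"}},
        "resources": [
            {
                "type": "Microsoft.OperationalInsights/workspaces/providers/alertRules",
                "apiVersion": API_VERSION,
                "name": "[concat(parameters('workspace'),"
                f"'/Microsoft.SecurityInsights/{rule_guid}')]",
                "kind": "Scheduled",
                "properties": {
                    "displayName": rule["name"],
                    "description": rule.get("description", ""),
                    "severity": rule["severity"],
                    "enabled": rule.get("enabled", True),
                    "query": rule["query"],
                    "queryFrequency": rule["queryFrequency"],
                    "queryPeriod": rule["queryPeriod"],
                    "triggerOperator": "GreaterThan",
                    "triggerThreshold": 0,
                    "suppressionDuration": "PT1H",
                    "suppressionEnabled": False,
                    "tactics": rule.get("tactics", []),
                },
            }
        ],
    }


# Hypothetical rule, as it might look after parsing a short YAML file.
example_rule = {
    "id": "failed-signins-burst",
    "name": "Burst of failed sign-ins",
    "severity": "Medium",
    "query": "SigninLogs | where ResultType != 0 | summarize count() by UserPrincipalName",
    "queryFrequency": "PT1H",
    "queryPeriod": "PT1H",
    "tactics": ["CredentialAccess"],
}

if __name__ == "__main__":
    print(json.dumps(rule_to_arm(example_rule), indent=2))
```

With something like this in the pipeline, the team reviews and discusses only the short rule definition, and the ARM wrapper becomes a build artifact nobody has to read.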

Either way, just remember that there are a lot of steps in the development process where you don’t need to be constrained by ARM and KQL.

In many steps, we can keep our focus outside of the actual languages. What data do we have, how do we find threats, and what happens during response? Keep your eyes on these, and then make sure the end result works in the technical deployment process.

Looking forward to continuing with this theme in more posts.